Web scraping, a technique employed to extract large amounts of data from websites, has become a crucial tool in the digital age. Whether for academic research, market analysis, or content aggregation, the ability to programmatically collect data from the web opens up a world of possibilities. One of the most popular libraries for web scraping in Python is BeautifulSoup. This article explores the ins and outs of web scraping using BeautifulSoup, providing a comprehensive guide for beginners and seasoned developers alike.

What is Web Scraping?

Web scraping involves the use of automated bots to visit web pages, extract information, and convert it into a usable format. The data collected can be stored in databases, spreadsheets, or other formats for further analysis and application. Unlike traditional data collection methods, web scraping can handle vast amounts of data with relative ease and efficiency.

Introduction to BeautifulSoup

BeautifulSoup is a Python library designed for quick-turnaround projects like web scraping. Created by Leonard Richardson and licensed under the MIT license, it can parse almost any HTML or XML you give it and provides simple methods and Pythonic idioms for navigating, searching, and modifying the resulting parse tree.

BeautifulSoup is powerful because it tolerates the messy and often inconsistent HTML found on real web pages. You still need to know which tags and attributes hold the data you want, but the library hides the low-level parsing details, which makes web scraping accessible to those with minimal coding experience.

Setting Up the Environment

Before diving into web scraping, it’s essential to set up your environment. You’ll need Python installed on your system. Additionally, BeautifulSoup requires a parser, and it’s common to use lxml or Python’s built-in html.parser.

Here are the steps to get started:

- Install Python: If you don’t have Python installed, download and install it from python.org.

- Install BeautifulSoup and Requests: BeautifulSoup works in conjunction with requests, a Python library for making HTTP requests. Install these libraries using pip:

      pip install beautifulsoup4 requests lxml
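
To confirm that the installation worked, a quick check along these lines can help. It is a minimal sketch: the HTML string and variable names are made up for illustration, and it uses Python's built-in html.parser so that it runs even if lxml is not available.

```python
from bs4 import BeautifulSoup

# A tiny, made-up HTML document used only to verify the install.
html = "<html><head><title>Install check</title></head><body><p>It works.</p></body></html>"

# html.parser ships with Python, so no extra parser package is needed here.
soup = BeautifulSoup(html, "html.parser")

title_text = soup.title.string
paragraph_text = soup.p.get_text()

print(title_text)      # Install check
print(paragraph_text)  # It works.
```

If the import fails, the most likely cause is that pip installed the packages into a different Python environment than the one running the script.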

Basic Usage of BeautifulSoup

BeautifulSoup works by parsing an HTML or XML document and building a parse tree from it. Here’s a simple example to illustrate how to use BeautifulSoup to scrape a webpage.

Step 1: Making a Request

First, you need to make an HTTP request to the webpage you want to scrape. The requests library makes this easy:

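    import requests

    url = 'http://example.com'

    # Set a timeout so the call cannot hang indefinitely
    response = requests.get(url, timeout=10)

    # Raise an exception on 4xx/5xx responses instead of parsing an error page
    response.raise_for_status()

    print(response.text)

Step 2: Parsing the HTML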

Once you have the HTML content, you can parse it using BeautifulSoup:

    from bs4 import BeautifulSoup

    soup = BeautifulSoup(response.text, 'lxml')

    print(soup.prettify())

Step 3: Navigating the Parse Tree

BeautifulSoup allows you to navigate the parse tree and extract the information you need. Here are some common methods:

- Finding Elements by Tag:

      title = soup.title
      print(title.string)

- Finding Elements by Attribute:

      link = soup.find('a')
      print(link['href'])

- Finding Multiple Elements:

      links = soup.find_all('a')
      for link in links:
          # .get() returns None instead of raising KeyError when href is missing
          print(link.get('href'))
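
The three lookups above can be tried without any network access. The following is a minimal sketch against a made-up HTML string (the page content and variable names are assumptions for illustration), which also shows how to guard against find() returning None and against anchors that have no href attribute.

```python
from bs4 import BeautifulSoup

# Made-up HTML so the results are predictable and no network access is needed.
html = """
<html>
  <head><title>Sample Page</title></head>
  <body>
    <a href="/first">First</a>
    <a href="/second">Second</a>
    <a name="no-href">Anchor without href</a>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")

# Tag access: soup.title is the first <title> element.
page_title = soup.title.string

# find() returns the first match, or None when nothing matches.
first_link = soup.find("a")
first_href = first_link["href"] if first_link is not None else None

# href=True keeps only <a> tags that actually carry an href attribute.
all_hrefs = [a["href"] for a in soup.find_all("a", href=True)]

print(page_title)  # Sample Page
print(first_href)  # /first
print(all_hrefs)   # ['/first', '/second']
```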

Example: Scraping Quotes from a Website

Let’s scrape a website that contains quotes to see BeautifulSoup in action. We’ll use http://quotes.toscrape.com, a sandbox site built for scraping practice.

- Make the Request:

      url = 'http://quotes.toscrape.com'
      response = requests.get(url)
      soup = BeautifulSoup(response.text, 'lxml')

- Extract Quotes:

      quotes = soup.find_all('span', class_='text')
      for quote in quotes:
          print(quote.text)

- Extract Authors:

      authors = soup.find_all('small', class_='author')
      for author in authors:
          print(author.text)

By following these steps, you can extract all quotes and their respective authors from the webpage.
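
The two loops above print quotes and authors separately. To keep each quote paired with its author, one option is to iterate over the container element that holds both. The sketch below runs against an inline HTML snippet that imitates that layout; the container (a div with class quote) and the sample text are assumptions for illustration, so check the real markup before relying on them.

```python
from bs4 import BeautifulSoup

# Made-up markup: each quote and its author share one container element.
html = """
<div class="quote">
  <span class="text">First sample quote.</span>
  <span>by <small class="author">Author One</small></span>
</div>
<div class="quote">
  <span class="text">Second sample quote.</span>
  <span>by <small class="author">Author Two</small></span>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

records = []
for block in soup.find_all("div", class_="quote"):
    text_tag = block.find("span", class_="text")
    author_tag = block.find("small", class_="author")
    # Skip blocks that do not have both parts instead of crashing.
    if text_tag is None or author_tag is None:
        continue
    records.append({
        "quote": text_tag.get_text(strip=True),
        "author": author_tag.get_text(strip=True),
    })

for record in records:
    print(f'{record["author"]}: {record["quote"]}')
```

Collecting the results as a list of dictionaries makes it straightforward to write them to a spreadsheet or database later.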

Advanced Techniques

While the basics of web scraping with BeautifulSoup are straightforward, more complex tasks may require advanced techniques.

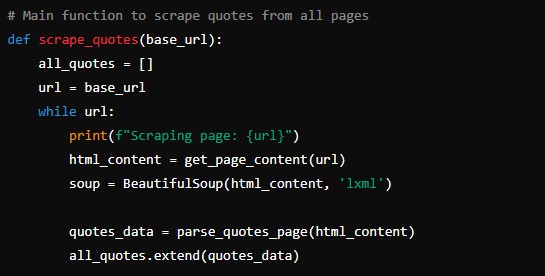

Handling Pagination

Many websites display data across multiple pages. To scrape such sites, you need to handle pagination. This usually involves finding the link to the next page and iterating over all pages.

    import requests
    from urllib.parse import urljoin

    from bs4 import BeautifulSoup

    url = 'http://quotes.toscrape.com'

    while url:
        response = requests.get(url)
        soup = BeautifulSoup(response.text, 'lxml')

        quotes = soup.find_all('span', class_='text')
        for quote in quotes:
            print(quote.text)

        # The "next" link is relative (e.g. /page/2/), so resolve it against the current URL
        next_page = soup.find('li', class_='next')
        url = urljoin(url, next_page.find('a')['href']) if next_page else None

Dealing with JavaScript-Rendered Content

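BeautifulSoup only parses the HTML it is given; it does not execute JavaScript, so content that a page renders in the browser will be missing from the parse tree. In such cases, tools like Selenium or Puppeteer, which control a real web browser, can be used to render the page fully before scraping.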
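
The limitation is easy to see with a small experiment. In this sketch the page is a made-up HTML string whose script would add a paragraph at runtime in a browser; since BeautifulSoup never runs the script, the paragraph is not in the parse tree.

```python
from bs4 import BeautifulSoup

# Made-up page: the quote paragraph is created by the script at runtime,
# so it is not present in the HTML that the server sends.
html = """
<html>
  <body>
    <div id="app"></div>
    <script>
      const p = document.createElement("p");
      p.className = "quote";
      p.textContent = "Loaded by JavaScript";
      document.getElementById("app").appendChild(p);
    </script>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")

container = soup.find("div", id="app")
rendered_quote = soup.find("p", class_="quote")

# The container exists but is empty, because the script never ran.
print(container.get_text(strip=True) == "")  # True
print(rendered_quote)                        # None
```

If a scraper returns empty containers like this one, that is a strong hint the data is loaded by JavaScript and a browser-driven tool is needed.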

Throttling and Respecting robots.txt

To avoid overloading websites with requests, it’s important to add delays between requests and respect the robots.txt file, which specifies how bots should interact with the site.

    import time
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup

    url = 'http://quotes.toscrape.com'

    while url:
        response = requests.get(url)
        soup = BeautifulSoup(response.text, 'lxml')

        quotes = soup.find_all('span', class_='text')
        for quote in quotes:
            print(quote.text)

        # Resolve the relative "next" link against the current URL
        next_page = soup.find('li', class_='next')
        url = urljoin(url, next_page.find('a')['href']) if next_page else None

        time.sleep(1)  # Throttle requests by adding a delay

Ethical Considerations

Web scraping must be performed ethically. Here are some guidelines to follow:

- Respect robots.txt: Always check and follow the rules specified in the robots.txt file of the website you are scraping.

- Do Not Overload Servers: Make requests responsibly to avoid putting excessive load on the server.

- Respect Privacy and Terms of Service: Ensure that scraping does not violate the website’s terms of service or privacy policies.

- Use Data Responsibly: Use the scraped data ethically, without infringing on privacy or intellectual property rights.
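
The robots.txt guideline can be checked programmatically with Python's standard-library urllib.robotparser module. The sketch below parses an inline, hypothetical robots.txt so that it runs offline; the rules, the example.com URLs, and the bot name my-scraper are all assumptions for illustration. Against a live site you would instead call set_url() with the site's robots.txt address and then read().

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

user_agent = "my-scraper"  # hypothetical bot name

allowed_public = parser.can_fetch(user_agent, "http://example.com/page/1/")
allowed_private = parser.can_fetch(user_agent, "http://example.com/private/data")
delay = parser.crawl_delay(user_agent)

print(allowed_public)   # True
print(allowed_private)  # False
print(delay)            # 2
```

A scraper can call can_fetch() before each request and, when crawl_delay() returns a value, use it as the pause between requests instead of a hard-coded one.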

Conclusion

Web scraping with BeautifulSoup is an effective way to extract data from websites. Its simple syntax makes it easy to navigate and parse HTML documents. By understanding the basics of making requests, parsing HTML, and extracting information, you can start scraping web content efficiently.

Advanced techniques such as handling pagination and dealing with JavaScript-rendered content expand the capabilities of your scrapers, while ethical considerations ensure your activities are responsible and respectful. Whether you’re gathering data for research, business, or personal projects, BeautifulSoup is an indispensable tool in your Python toolkit.